Behind the Flowers: Part 1

Hand-drawn animation, AI, and my collaboration with "The Furrow."

To start off my 2025 bookings, I had the privilege of working with my friends at “The Furrow,” a top-notch animation, design, and interactive studio. Thanks to their expert-level organization and immaculate vibes, we blazed through our main assignment and had time for a few side quests.

We ended up creating “Flowers,” a short printed loop:

See The Furrow’s Instagram for the full video with sound design by Sonosanctus. While it’s a short little loop, a lot of thought, exploration, and enthusiasm went into this piece. Initially, we used the animation as a way to explore incorporating AI into our pipeline, without sacrificing human ideas. So let’s talk about how that went:

Our Intention With AI

We wanted to create something with a hint of spring, while experimenting with a machine learning/AI texture generator called “EBsynth.”

I know, it’s “AI.” It’s scary. But I’d like to add some nuance here:

AI is not a disembodied force that consciously replaces human labor. Corporations are doing that. Corporate greed is to blame for our jobs being replaced with AI. I encourage artists to remember that distinction. And to remember that AI can be another tool to accent our ideas, not to replace them completely.

Our aim was to push the tool to accent our own original ideas, not to replace human work. We also wanted to find out whether EBsynth could be a worthwhile production tool.

EBsynth Basics

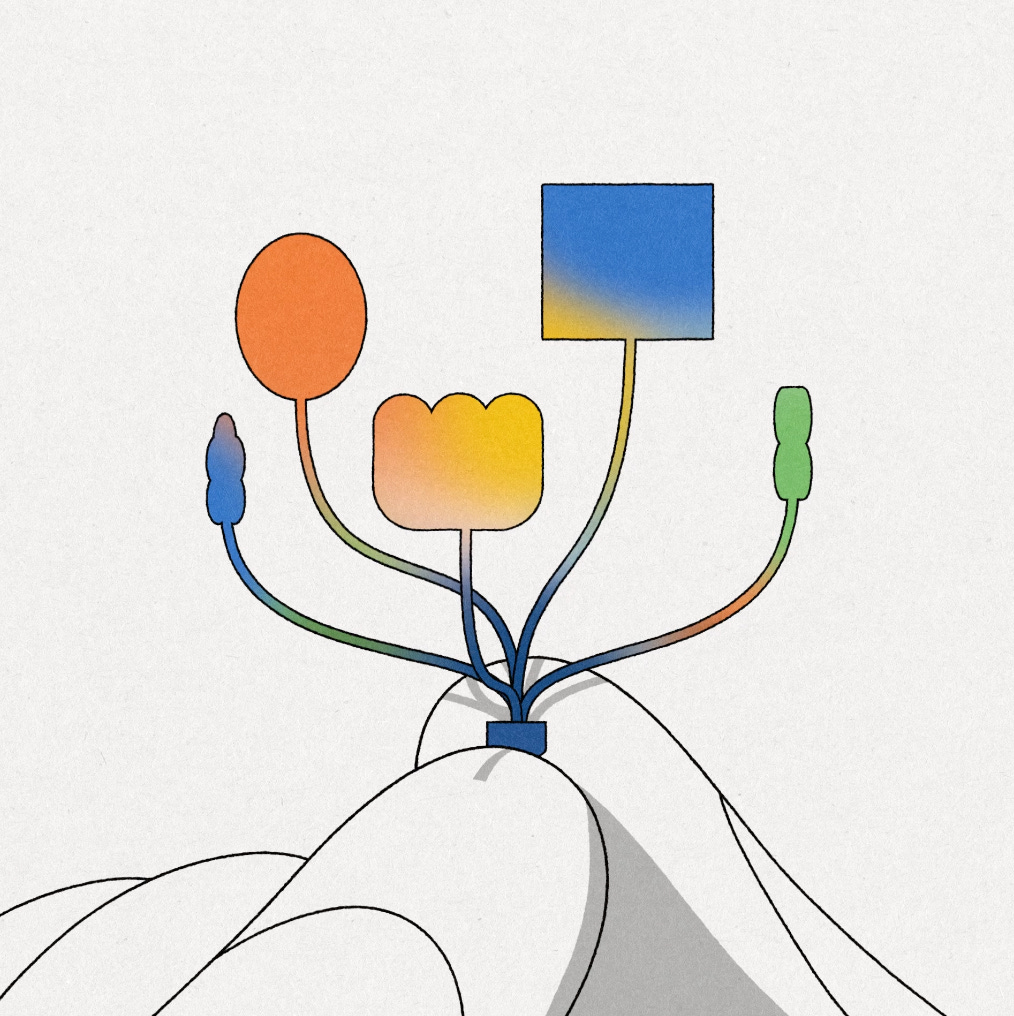

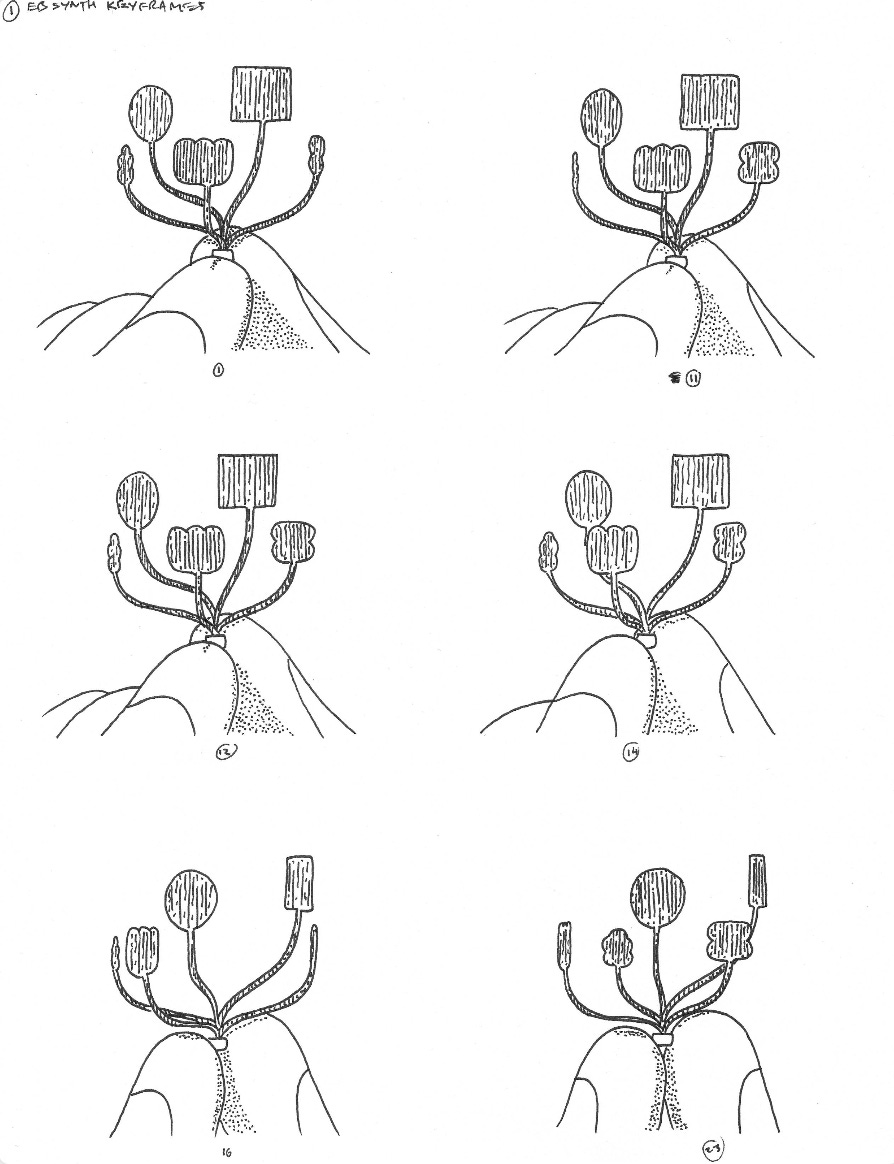

So how does it work? EBsynth takes an image sequence (a series of images that are stored together and displayed in order to create the illusion of motion) along with a set of designed keyframes, and renders a new image sequence that applies those keyframe styles to the rest of the frames. Example below:

For hand-drawn animation, this has some amazing potential. In the right situation, maybe EBsynth could save you a cleanup pass! So why not try to apply this to a larger sequence? Therein lies our experiment.

Designing The Exploration

On the topic of spring, I thought of “flowers” pretty quickly. But how do I turn a clichéd spring idea into something more unique? I tried pushing the flowers into more abstract territory, playing with how 2D shapes could move in a more 3D way.

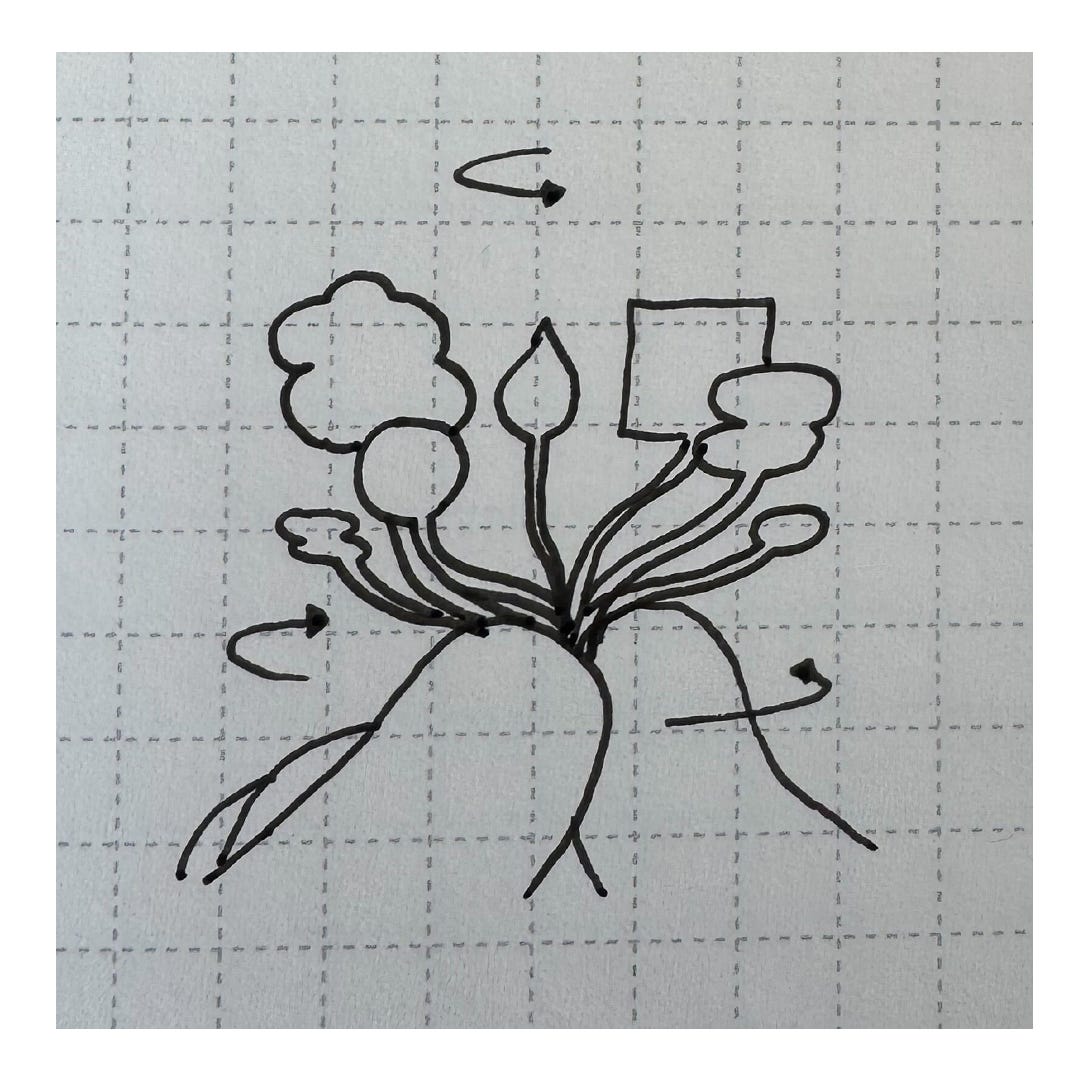

I did some tests in both sketch and animation form, trying to get a more specific feeling for the motion.

Seth (Owner and ECD at The Furrow) wanted to add something more to this and I agreed. While the 2D rotation idea was cool, we didn’t have much grounding the idea yet.

Seth’s feedback shifted me towards adding a more human element interacting with the flowers. I like pushing scale, so I thought about a gardener turning a tiny vase of flowers between their fingers.

This felt more resolved as a concept, so we moved forward. With a solid design direction, I roughed out the animation in Animate CC, cleaned it up in After Effects, and exported an image sequence for EBsynth to process.

Printer vs. AI

If you’ve followed my work at all, you know I love combining printmaking and animation. So I wanted to make a printed version of this animation (regardless of the AI exploration) and see if EBsynth could create a hand-drawn look as well.

I’ll admit, I could have been more scientific about our experiments. However, my goal was to push the limits of the tool. I knew it could handle a simple loop, but I wanted to add more elements for it to deal with. So I set up a very convoluted process which I’ll break down step by step:

I exported the image sequence from After Effects at 8 frames per second.

I imported the image sequence into Photoshop and had the software automatically lay out the images as contact sheets.

Using my light table, I lined up a piece of graph paper on top of each contact sheet and drew stylistically different keyframes for EBsynth to process. I chose which keyframes to draw out based on important moments in the sequence.

I focused on keyframes where the flowers overlapped or became flatter. EBsynth would need that information to piece together the animation.

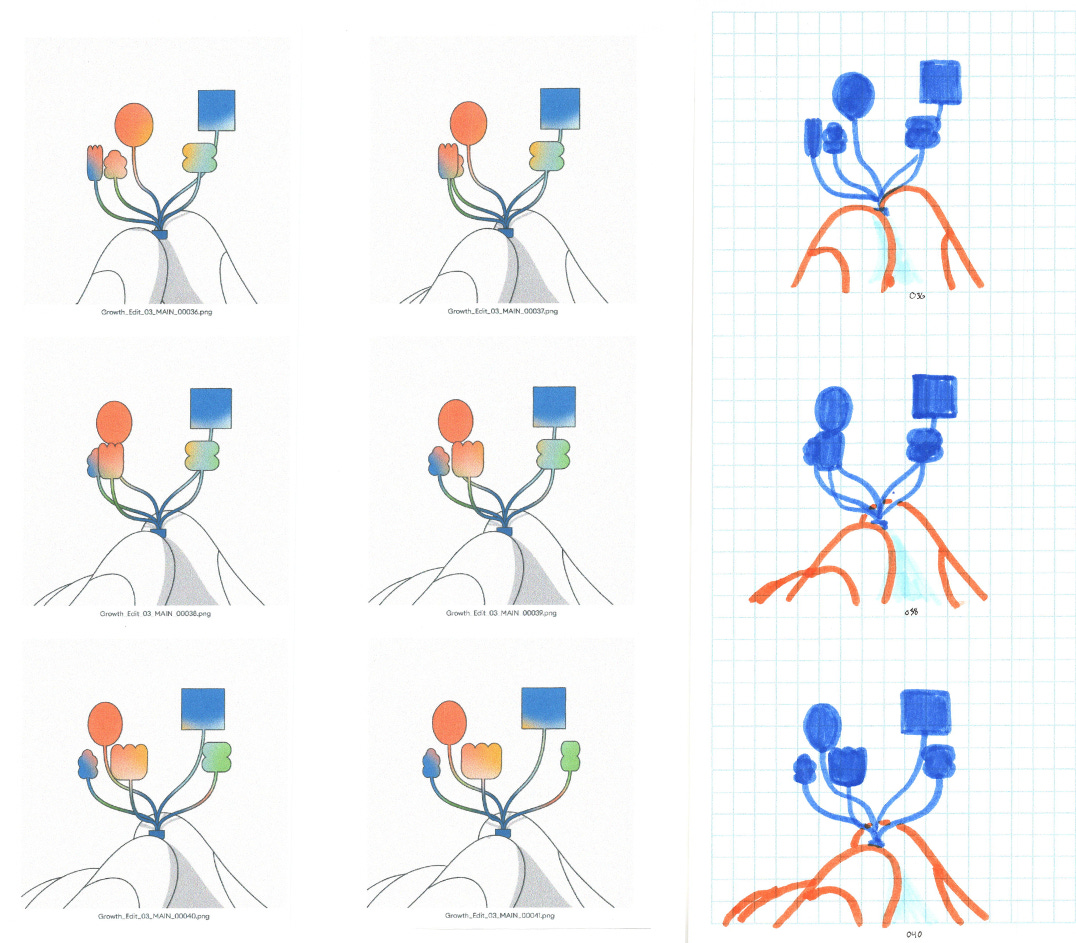

I ran the image sequence and the input keyframes through EBsynth. The results were…cool? Strange? Crunchy? Deep fried? You can almost see the technology struggling to process all the information. The graph paper definitely broke EBsynth a bit.
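If you’d rather script the contact sheet step than lean on Photoshop, the layout math is simple. Here’s a minimal Python sketch of it. To be clear, this is not what I used on the project: the function name `contact_sheet_layout`, the cell size, the margin, and the 24-frame count are all made-up values for illustration. It only works out where each frame would sit on the sheet, so you’d still need an imaging tool to paste the frames in.

```python
from math import ceil


def contact_sheet_layout(frame_count, columns, cell_w, cell_h, margin=0):
    """Return the sheet size and the top-left (x, y) of each frame.

    Frames are placed left to right, top to bottom, with `margin`
    pixels around the sheet edge and between neighbouring cells.
    """
    if frame_count < 1 or columns < 1:
        raise ValueError("frame_count and columns must be at least 1")

    rows = ceil(frame_count / columns)
    positions = []
    for index in range(frame_count):
        row, col = divmod(index, columns)
        x = margin + col * (cell_w + margin)
        y = margin + row * (cell_h + margin)
        positions.append((x, y))

    sheet_w = margin + columns * (cell_w + margin)
    sheet_h = margin + rows * (cell_h + margin)
    return (sheet_w, sheet_h), positions


# Hypothetical example: a 3-second loop at 8 fps is 24 frames.
size, positions = contact_sheet_layout(
    frame_count=24, columns=4, cell_w=480, cell_h=270, margin=20
)
```

Keeping the grid regular like this also makes it easier to line up paper over each cell on a light table, since every frame sits at a predictable offset.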

This was a dense experiment. If you’re having trouble wrapping your head around this or just want to talk, feel free to leave a comment and I’ll see if I can clear anything up.

To Conclude: Big Brain Thoughts

In my humble opinion, true printed texture is hard to replicate without…well…printing it. Perhaps someone more patient with After Effects could do it through a series of adjustment layers and texture overlays, but that’s not always how I want to work. The printing and scanning process is about catching small moments and compiling imperfections. Mimicking that digitally feels conceptually empty to me.

EBsynth can be a great animation tool for the right situation. Ultimately, the flowers loop presented too many “new” moments in the sequence, which the software had a hard time with. Perhaps adding more keyframes in less dense styles would have worked? Or maybe even re-designing every other frame in the sequence, which would be half the work overall. That’s still time saved. But at that point, you might as well do it all yourself, right?

Thanks to The Furrow for letting me mess around with this! I hope our exploration provides an interesting perspective on AI in animation.